Here is something I learned just yesterday. It was one of those fun cases where I knew every piece of the puzzle but had never “made the connection” between them.

If you aren’t sure what extension methods are, I wrote a blog post about them back in 2008 that you can check out here.

Here is an example for today:

```csharp
using System.Linq;

public static class ExtensionMethods
{
    // Returns true when the array is null or contains no elements.
    public static bool IsEmptyStringArray(this string[] input)
    {
        if (input == null) return true;

        return !input.Any();
    }
}
```

What I’ve done is create a method that lets you call .IsEmptyStringArray() on any string array to find out whether it is empty, which here means either null or containing no items. I realize this is a fairly useless example, but it is contrived for the sake of the demonstration.

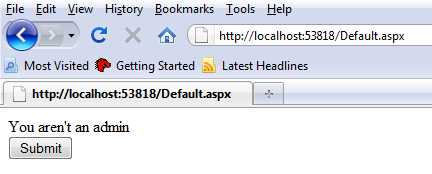

Now, if I call a “framework” method on a null string array, I get an error. So, doing something like this:

```csharp
string[] nullArray = null;
var hasItems = nullArray.Any();
```

Results in the error “Unhandled Exception: System.ArgumentNullException: Value cannot be null.”
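The exception type is worth a second look: it is an ArgumentNullException rather than a NullReferenceException. That is because Any() is itself an extension method, defined on System.Linq.Enumerable, and it explicitly checks its source argument and throws. Here is a minimal console sketch that calls Any() both ways; the AnyNullDemo class and its ThrownBy helper are hypothetical names used only for this illustration.

```csharp
using System;
using System.Linq;

public static class AnyNullDemo
{
    // Runs the call and reports which exception type it threw (null if none).
    public static Type ThrownBy(Func<bool> call)
    {
        try
        {
            call();
            return null;
        }
        catch (Exception ex)
        {
            return ex.GetType();
        }
    }

    public static void Main()
    {
        string[] nullArray = null;

        // Extension-method syntax...
        Console.WriteLine(ThrownBy(() => nullArray.Any()));

        // ...and the same method written as a plain static call.
        Console.WriteLine(ThrownBy(() => Enumerable.Any(nullArray)));

        // Both lines print System.ArgumentNullException
    }
}
```

Both forms fail identically, so the error comes from Any() validating its input, not from anything being invoked on the null reference itself.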

However, I *CAN* call my extension method on that null array.

```csharp
string[] nullArray = null;
var hasItems = !nullArray.IsEmptyStringArray();
Console.WriteLine(hasItems);
```

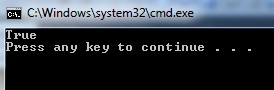

This code produces the following result:

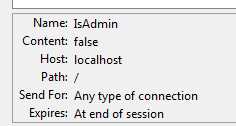

How does that work? This is the part I had never put together in my mind. When you call an extension method, the compiler emits an ordinary static method call. Here is the IL for the call above:

```
call bool CodeSandbox2010.ExtensionMethods::IsEmptyStringArray(string[])
```

The “syntactic sugar” part is that you aren’t actually calling a method on the null object at all. You are calling an ordinary static method and passing the null reference in as its argument, just like any other method call, so nothing gets dereferenced unless your method body does it. I really like that, because it gives you a concise way to write your code without the same null check over and over again throughout your codebase. You can do the check once, inside the extension method, and then get on with what you’re doing.
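To see the sugar directly, here is a minimal console sketch that calls the method both ways. It repeats the ExtensionMethods class from the post so it compiles on its own; the Program class and the variable names are just for illustration.

```csharp
using System;
using System.Linq;

public static class ExtensionMethods
{
    // Same method as in the post: true when the array is null or has no items.
    public static bool IsEmptyStringArray(this string[] input)
    {
        if (input == null) return true;

        return !input.Any();
    }
}

public static class Program
{
    public static void Main()
    {
        string[] nullArray = null;

        // Extension-method syntax: reads like an instance call on a null reference...
        bool viaExtensionSyntax = nullArray.IsEmptyStringArray();

        // ...but it compiles to this ordinary static call,
        // which simply passes null in as the argument.
        bool viaStaticCall = ExtensionMethods.IsEmptyStringArray(nullArray);

        Console.WriteLine(viaExtensionSyntax); // True
        Console.WriteLine(viaStaticCall);      // True
    }
}
```

Both calls print True, since both end up as the same static call with null as the argument.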