Recently, I was trying to throw a quick method on a controller to create a user on the fly. I was pretty new to a .NET Core Web API project and I just needed to add a quick user so I could start testing some of the authenticated API calls that I was creating. So, I wrote code that was very similar to this:

    [HttpGet("~/peteonsoftware")]
    public async void CreateUser()
    {
        var user = new ApplicationUser()
        {
            Email = "me@myserver.com",
            UserName = "me@myserver.com"
        };

        var result = await _userManager.CreateAsync(user, "SomePasswordThatIUsed,YouKnowHowItIs...");
    }

When I started it up and made a call to http://localhost:56617/peteonsoftware, I got an error that read:

System.ObjectDisposedException occurred HResult=0x80131622 Message=Cannot access a disposed object. A common cause of this error is disposing a context that was resolved from dependency injection and then later trying to use the same context instance elsewhere in your application. This may occur if you are calling Dispose() on the context, or wrapping the context in a using statement. If you are using dependency injection, you should let the dependency injection container take care of disposing context instances.

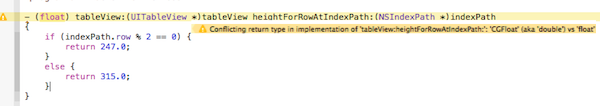

I could not figure this out. I would debug and stop on the line, and my _userManager was populated via dependency injection. I reviewed the documentation, and what I was calling should have worked. I lost a lot of time to this. It turns out that I was the victim of how async void methods behave. Notice that my method is async. Notice also that my method returns void. An async method hands control back to its caller at the first await that actually has to wait, and because mine returned void, there was no Task for the framework to wait on. So, as far as .NET is concerned, my action is finished as soon as it fires off that CreateAsync() call.

I naively thought that my await would keep everything holding on until the method returned. NOPE! The await only pauses my method, not its caller. I found through research that my method was returning before it was done, so the framework went ahead and started cleaning up and disposing everything that was scoped to the request. Therefore, when the rest of CreateAsync() went to work, the context underneath it was already disposed, resulting in my error. When I made the small change of returning a Task instead of void, everything worked.

    [HttpGet("~/peteonsoftware")]
    public async Task<IdentityResult> CreateUser()
    {
        var user = new ApplicationUser()
        {
            Email = "me@myserver.com",
            UserName = "me@myserver.com"
        };

        var result = await _userManager.CreateAsync(user, "SomePasswordThatIUsed,YouKnowHowItIs...");

        // Yes, I know I could just return above, but I like it to be separate sometimes, don't judge me!
        return result;
    }

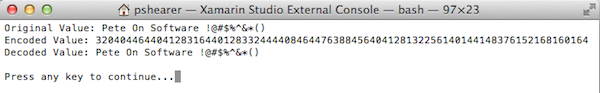

Now when I call the endpoint, I get back:

{"succeeded":true,"errors":[]}

So, lesson to learn… async void is BAD! Lots of unexpected consequences. Always return a Task (or Task<T>) from an async method so that the caller can await it and nothing gets cleaned up early.
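
If you want to see the mechanism without spinning up a Web API project, here is a rough console sketch of it. Everything in it is made up for illustration: FakeContext stands in for the scoped context behind _userManager, Invoke is a heavily simplified stand-in for what the framework does around an action (it is not the real ASP.NET Core pipeline), and the TaskCompletionSource named dbCall plays the part of the slow database call.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for a request-scoped dependency such as a DbContext.
public sealed class FakeContext : IDisposable
{
    public bool IsDisposed { get; private set; }
    public void Dispose() { IsDisposed = true; }
}

public static class AsyncVoidDemo
{
    // async void: control returns to the caller at the first incomplete await,
    // and the caller gets nothing it can wait on.
    public static async void SaveVoid(FakeContext ctx, Task dbCall, TaskCompletionSource<string> report)
    {
        await dbCall;
        report.SetResult(ctx.IsDisposed ? "disposed" : "alive");
    }

    // async Task: identical body, but the caller receives a Task to await.
    public static async Task SaveTask(FakeContext ctx, Task dbCall, TaskCompletionSource<string> report)
    {
        await dbCall;
        report.SetResult(ctx.IsDisposed ? "disposed" : "alive");
    }

    // Simplified stand-in for the framework (an assumption, not the real pipeline):
    // run the action, await whatever Task it hands back, then dispose the context.
    public static async Task Invoke(Func<FakeContext, Task> action)
    {
        using (var ctx = new FakeContext())
        {
            await action(ctx);
        }
    }

    // Runs one scenario and returns what the action saw when its await resumed.
    public static async Task<string> Run(bool useAsyncVoid)
    {
        var dbCall = new TaskCompletionSource<bool>();    // the pretend database call
        var report = new TaskCompletionSource<string>();  // what the action observed

        Task request = useAsyncVoid
            // Nothing to hand back from an async void, so the "framework" sees a finished action.
            ? Invoke(ctx => { SaveVoid(ctx, dbCall.Task, report); return Task.CompletedTask; })
            : Invoke(ctx => SaveTask(ctx, dbCall.Task, report));

        dbCall.SetResult(true);   // the database call finishes only after the action was invoked
        await request;
        return await report.Task;
    }

    public static async Task Main()
    {
        Console.WriteLine("async void saw the context " + await Run(true));   // disposed
        Console.WriteLine("async Task saw the context " + await Run(false));  // alive
    }
}
```

In the async void run, Invoke has nothing to await, so it disposes the context before the pretend database call ever completes. In the async Task run, the dispose waits its turn. The real code throws an ObjectDisposedException at that point; the sketch only reports what it saw so that the demo doesn't crash.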